NetworKHelp

Online Training Room https://www.youtube.com/channel/UCXDXyOrYcBuQRd1EUVYAKeg?view_as=subscriber

Monday, 10 February 2025

Tuesday, 15 October 2024

|| NetworkHelp | Network Help IT Education Professional IT Training

"Network Help It Education in Ambala Cantt is one of the leading computer Training Institutes. We are affiliated with Indian Institute of Skill Development Training center. We provide more than 100 + industry-level trainings in Full Stack Web Development, HTML, CSS, Java Script, SQL, Python, C++, Java Programming, React, Node.js, PHP, CCC, CCC+

We provide AUTOCAD Training, Tally Training, Digital Marketing Courses, Computer Hardware and Networking. We provide corporate-level training in CCNA, CCNP, firewall cyber security, and ethical hacking.

We also provide Video Editing Training and much more. Network Help It Education was established in 2016. This well-known establishment acts as a one-stop destination, serving customers both local and from other parts of Ambala."

Please join us to achieve your goal in the world of information technology.

📍 Location Ambala Cantt

Address: SCO 8, 9, 10, Arya Nagar, Mahesh Nagar, Ambala Cantt, Haryana 133001

Phone: 9896777167, 09896777267

https://g.co/kgs/Q##instagram

Sunday, 4 August 2024

Friday, 3 May 2024

Tuesday, 17 October 2023

Tuesday, 8 November 2022

Monday, 3 October 2022

Tuesday, 20 September 2022

Saturday, 6 August 2022

Wednesday, 27 July 2022

Chapter 1 - What is Wireshark || NetworKHelp

Chapter- 2 Wireshark Installation and live packets captures packets || NetworKHelp

Monday, 5 July 2021

Sunday, 4 July 2021

Saturday, 7 November 2020

Sunday, 9 August 2020

Saturday, 25 July 2020

Sunday, 19 July 2020

Sunday, 10 May 2020

Monday, 27 April 2020

Wednesday, 22 April 2020

Saturday, 18 April 2020

Tuesday, 14 April 2020

CheckPoint Packet Flow

Check Point processes each packet on ingress and on egress using two chains.

Basic:

Physical layer - ingress interface

Data Link Layer/Ethernet

Inspect Driver [inspect Engine]

Network Layer/IP Routing

Inspect Driver

Data Link Layer/Ethernet

Physical layer - egress interface

Advanced:

1. NIC hardware

-The network card receives electrical signalling from the link partner.

2. NIC driver

-Sanity checks

-The NIC hardware decodes the signal and passes it to the operating system's NIC driver via the PCI bus

-The frame is converted to an mbuf entry and the frame headers are stored for later use.

-NIC driver hands off the data to the operating system's mbuf memory space

3. Operating system IP protocol stack

-The OS performs sanity checks on the packet

-Hand off to SXL if enabled, or to Firewall Kernel if not

4. SecureXL (if enabled)

-SXL lookup is performed, if it matches, bypass the firewall kernel and proceed with (Operating system IP protocol stack, outbound side)

5. Firewall Kernel (inbound processing)

-FW Monitor starts here (so traffic accelerated by SecureXL is not seen; you may need to disable SecureXL with fwaccel off. CAUTION: use this with care on a production gateway)

-Connection state lookups, some protocol inspection, rulebase processing, antispoofing lookups etc

-Processing order can be seen via fw ctl chain

-Bypass complex inspection if not needed

6. Complex protocol inspection (AV is an example)

-The packet leaves the kernel and is processed in userland.

-It re-enters at this same stage if the traffic passed

(inbound processing stops here)

(outbound processing starts here)

7. Firewall Kernel

-Route lookup

-Check Point sanity checks etc

-FW Monitor ends here

-Pass to operating system

8. Operating system IP protocol stack

-The OS performs sanity checks on the packet

-Pass the mbuf to the NIC driver for the appropriate outbound interface

9. NIC driver

-Tag the packet as an ethernet frame by adding MAC addresses for source and destination

10. NIC hardware

-The NIC hardware encodes the signal and transmits it via wire
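The hand-off described in steps 3 to 5 can be sketched in Python. This is only an illustrative model of the decision, not Check Point code: the names `Packet`, `choose_path` and `sxl_table` are hypothetical, and the SecureXL lookup is reduced to a simple set membership test.

```python
from typing import NamedTuple, Set, Tuple

# Hypothetical 5-tuple key: (src, dst, sport, dport, proto)
ConnKey = Tuple[str, str, int, int, str]


class Packet(NamedTuple):
    src: str
    dst: str
    sport: int
    dport: int
    proto: str


def choose_path(pkt: Packet, sxl_enabled: bool, sxl_table: Set[ConnKey]) -> str:
    """Return which component handles the packet after the OS IP stack."""
    if sxl_enabled and tuple(pkt) in sxl_table:
        # Step 4: the SXL lookup matched, so the firewall kernel is bypassed.
        return "accelerated"
    # Step 3/5: SecureXL is disabled or had no match, so the packet
    # is handed to the Firewall Kernel for inbound processing.
    return "firewall kernel"


pkt = Packet("10.0.0.5", "172.16.5.1", 40000, 80, "tcp")
print(choose_path(pkt, True, {tuple(pkt)}))   # known connection, SecureXL on
print(choose_path(pkt, True, set()))          # unknown connection
print(choose_path(pkt, False, {tuple(pkt)}))  # SecureXL off
```

Only the second and third calls reach the firewall kernel, which is also where FW Monitor starts in the list above.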

Fig. Ctl Chain

TIP:

1. DST NAT can happen between i & I (when client side NAT is enabled [DEFAULT]) or between o & O (server side NAT).

2. SRC NAT will happen immediately after o (after routing), regardless of whether client side NAT is enabled.

- Static NAT - One to one translation

- Hide/Dynamic NAT - Allows you to NAT multiple IPs behind one IP/Interface

- Automatic NAT - Quick basic address NAT translation.

- Manual NAT - Allows greater flexibility than Automatic NAT, and is preferred over it. If you are using manual rules, you must configure proxy ARPs to associate the translated IP address with the MAC address of the Security Gateway interface that is on the same network as the translated addresses. Configuration depends on the OS.

- 'Global Properties' - go to 'NAT' - go to 'Automatic NAT rules'

- Server Side NAT - destination is NAT'd by the outbound kernel

- Client Side NAT - destination is NAT'd by the inbound kernel

- 'Global Properties' - go to 'NAT' - go to 'Manual NAT rules'

Between all the steps there are queues. These queues accumulate packets and flush them to the next step at intervals. All of this happens very quickly, in small CPU time slices.

The INSPECT engine itself deals specifically with protocol inspection rather than with all of the other steps. INSPECT runs traffic against definitions; a match usually means that the traffic hit a protection, and the appropriate action (drop, log) is applied to it.

VM refers to the firewall Virtual Machine (Check Point terminology). fw monitor captures at four points relative to it:

i (PREIN) – inbound direction, before the firewall VM.

I (POSTIN) – inbound direction, after the firewall VM.

o (PREOUT) – outbound direction, before the firewall VM.

O (POSTOUT) – outbound direction, after the firewall VM.

Fig. fw monitor in the Chain.

TIP: Source NAT occurs after outbound inspection (o), not between (I) and (o), so if we are doing source NAT we won't see (O). The same happens if the traffic flows through a VPN.

(00000001) new processed flows

(00000002) previous processed flows

(00000003) ciphered traffic

(ffffffff) Everything

[Expert@firewall]# fw ctl chain

in chain (19):

0: -7f800000 (f27f9c20) (ffffffff) IP Options Strip (in) (ipopt_strip)

1: -7d000000 (f1ee6000) (00000003) vpn multik forward in

2: - 2000000 (f1ecad30) (00000003) vpn decrypt (vpn)

3: - 1fffff8 (f1ed7550) (00000001) l2tp inbound (l2tp)

4: - 1fffff6 (f27faff0) (00000001) Stateless verifications (in) (asm)

5: - 1fffff5 (f2c6b240) (00000001) fw multik VoIP Forwarding

6: - 1fffff2 (f1ef30b0) (00000003) vpn tagging inbound (tagging)

7: - 1fffff0 (f1ecbb20) (00000003) vpn decrypt verify (vpn_ver)

8: - 1000000 (f2849190) (00000003) SecureXL conn sync (secxl_sync)

9: 0 (f27af6b0) (00000001) fw VM inbound (fw)

10: 1 (f2812680) (00000002) wire VM inbound (wire_vm)

11: 2000000 (f1ecdff0) (00000003) vpn policy inbound (vpn_pol)

12: 10000000 (f284e9e0) (00000003) SecureXL inbound (secxl)

13: 21500000 (f3b2c940) (00000001) RTM packet in (rtm)

14: 7f600000 (f27f0910) (00000001) fw SCV inbound (scv)

15: 7f730000 (f2928620) (00000001) passive streaming (in) (pass_str)

16: 7f750000 (f2a3bbf0) (00000001) TCP streaming (in) (cpas)

17: 7f800000 (f27f9fb0) (ffffffff) IP Options Restore (in) (ipopt_res)

18: 7fb00000 (f2a07540) (00000001) HA Forwarding (ha_for)

out chain (16):

0: -7f800000 (f27f9c20) (ffffffff) IP Options Strip (out) (ipopt_strip)

1: -78000000 (f1ee5fe0) (00000003) vpn multik forward out

2: - 1ffffff (f1eccbd0) (00000003) vpn nat outbound (vpn_nat)

3: - 1fffff0 (f2a3ba70) (00000001) TCP streaming (out) (cpas)

4: - 1ffff50 (f2928620) (00000001) passive streaming (out) (pass_str)

5: - 1ff0000 (f1ef30b0) (00000003) vpn tagging outbound (tagging)

6: - 1f00000 (f27faff0) (00000001) Stateless verifications (out) (asm)

7: 0 (f27af6b0) (00000001) fw VM outbound (fw)

8: 1 (f2812680) (00000002) wire VM outbound (wire_vm)

9: 2000000 (f1ecdaa0) (00000003) vpn policy outbound (vpn_pol)

10: 10000000 (f284e9e0) (00000003) SecureXL outbound (secxl)

11: 1ffffff0 (f1ed71e0) (00000001) l2tp outbound (l2tp)

12: 20000000 (f1ecce40) (00000003) vpn encrypt (vpn)

13: 24000000 (f3b2c940) (00000001) RTM packet out (rtm)

14: 7f700000 (f2a3b810) (00000001) TCP streaming post VM (cpas)

15: 7f800000 (f27f9fb0) (ffffffff) IP Options Restore (out) (ipopt_res)
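When comparing chains between gateways it can help to load this output into a script. The following is a minimal sketch, assuming the line layout shown above (index, signed hex value, address in parentheses, flags in parentheses, module name). The names `ChainModule`, `parse_chain` and the field name `position` are hypothetical, and reading the signed value as a position relative to fw VM (which sits at 0) is an interpretation of the listing, not documented behaviour.

```python
import re
from typing import List, NamedTuple


class ChainModule(NamedTuple):
    index: int
    position: int  # signed hex value from the second column
    mask: int      # flags from the second parenthesised column
    name: str


# "- 2000000" appears with a space after the sign, so allow whitespace there.
LINE_RE = re.compile(
    r"^\s*(\d+):\s*(-?)\s*([0-9a-f]+)\s+\([0-9a-f]+\)\s+\(([0-9a-f]+)\)\s+(.+?)\s*$"
)


def parse_chain(text: str) -> List[ChainModule]:
    """Parse chain module lines; headers such as 'in chain (19):' are skipped."""
    modules = []
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if not match:
            continue
        index, sign, value, mask, name = match.groups()
        position = int(value, 16)
        modules.append(
            ChainModule(int(index), -position if sign else position, int(mask, 16), name)
        )
    return modules


SAMPLE = """in chain (19):
0: -7f800000 (f27f9c20) (ffffffff) IP Options Strip (in) (ipopt_strip)
2: - 2000000 (f1ecad30) (00000003) vpn decrypt (vpn)
9: 0 (f27af6b0) (00000001) fw VM inbound (fw)
12: 10000000 (f284e9e0) (00000003) SecureXL inbound (secxl)
"""

modules = parse_chain(SAMPLE)
before_vm = [m.name for m in modules if m.position < 0]
print(before_vm)
```

With the four sample lines, `before_vm` holds the two modules listed ahead of fw VM inbound.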

Acceleration

Performance Pack is a software acceleration product installed on Security Gateways. Performance Pack uses SecureXL technology and other innovative network acceleration techniques to deliver wire-speed performance for Security Gateways.

In a SecureXL-enabled gateway, the firewall first uses the SecureXL API to query the SecureXL device and discover its capabilities. The firewall then implements a policy that determines which parts of which sessions are to be handled by the firewall, and which should be offloaded to the SecureXL device.

When new sessions attempt to get established across the gateway, the first packet of each new session is inspected by the firewall to ensure that the connection is allowed by the security policy. As the packet is inspected, the firewall determines the required behavior for the session, and based on its policy it may then offload some or all of the session handling to the SecureXL device. Thereafter, the appropriate packets belonging to that session are inspected directly by the SecureXL device. The SecureXL device implements the security logic required for further analysis and handling of the traffic. If it identifies anomalies, it consults back with the firewall software and IPS engine.

In addition, SecureXL provides a mode that allows connection setup to be done entirely in the SecureXL device, thus providing an extremely high session rate.

Fig. FW Paths and core processing

Medium Path is known as PXL (SXL + PSL [PSL passive Streaming Library IPS related])

Slow Path (Firewall Path) is known as F2F (Forwarded 2 Firewall)

fwaccel (Firewall Acceleration) is the command used to check and manage the SecureXL acceleration device.

SIM (SecureXL Implementation Module) Affinity (association with a core) can be managed automatically by Check Point (every 60 seconds) or configured statically.

TIP: To achieve the best performance, pairs of interfaces carrying significant data flows (based on network topology) should be assigned to pairs of CPU cores on the same physical processor.

- Active Streaming (CPAS) - Technology that sends streams of data to be inspected in the kernel, since more than a single packet at a time is needed in order to understand the application that is running (such as HTTP data). Active Streaming is Read- and Write-enabled, and works as a transparent proxy. Connections that pass through Active Streaming can not be accelerated by SecureXL.

- Passive Streaming - Technology that sends streams of data to be inspected in the kernel, since more than a single packet at a time is needed in order to understand the application that is running (such as HTTP data). Passive Streaming is Read-only and it cannot hold packets, but the connections are accelerated by SecureXL.

- Passive Streaming Library (PSL) - IPS infrastructure, which transparently listens to TCP traffic as network packets, and rebuilds the TCP stream out of these packets. Passive Streaming can listen to all TCP traffic, but process only the data packets, which belong to a previously registered connection. For more details, refer to sk95193 (ATRG: IPS).

- PXL - Technology name for combination of SecureXL and PSL.

- QXL - Technology name for combination of SecureXL and QoS (R77.10 and above).

- F2F / F2Fed - Packets that can not be accelerated by SecureXL (refer to sk32578 (SecureXL Mechanism)) are Forwarded to Firewall.

- F2P - Forward to PSL/Applications. Feature that allows to perform the PSL processing on the CPU cores, which are dedicated to the Firewall.

HyperThreading

SMT: New in R77. It minimizes context switching inside a physical core and may improve performance (or may not: for example, memory usage increases and the connection table size can be reduced).

It is not supported in Open Servers.

It is strongly recommended to disable Hyper-Threading in BIOS when CoreXL is enabled (on Check Point appliances this is disabled, by default). Applies to Intel processors prior to "Intel Nehalem (Core i7)", where this technology was improved (called Simultaneous Multi-Threading, or Intel® Hyper-Threading)

Multicore

Multi-core processing in Check Point is known as CoreXL. There are some limitations when CoreXL is enabled.

The following features/settings are not supported in CoreXL:

- Check Point QoS (Quality of Service)(1)

- 'Traffic View' in SmartView Monitor(2) (all other views are available)

- Route-based VPN

- IP Pool NAT(3) (refer to sk76800)

- IPv6(4)

- Firewall-1 GX

- Overlapping NAT

- SMTP Resource(3)

- VPN Traditional Mode (refer to VPN Administration Guide - Appendix B for converting a Traditional policy to a Community-Based policy)

If any of the above features/settings is enabled/configured in SmartDashboard, then CoreXL acceleration will be automatically disabled on the Gateway (while CoreXL is still enabled). In order to preserve consistent configuration, before enabling one of the unsupported features, deactivate CoreXL via the 'cpconfig' menu and reboot the Gateway (in a cluster setup, CoreXL should be deactivated on all members).

Notes:

- (1) - supported on R77.10 and above (refer to sk98229)

- (2) - supported on R75.30 and above

- (3) - supported on R75.40 and above

- (4) - supported on R75.40 and above on SecurePlatform/Gaia/Linux only

There are two main roles for CPUs applicable to SecureXL and CoreXL:

- SecureXL and CoreXL dispatcher CPU (the SND - Secure Network Distributor). You can manually configure this using the sim affinity -s command. (Exception: cpmq if Multi-Queue is used.)

- CoreXL firewall instance CPU. You can manually configure this using the fw ctl affinity command.

Traffic is processed by the CoreXL FW instances only when the traffic is not accelerated by SecureXL (if SecureXL is installed and enabled). So, if all the traffic is accelerated, we can have several fw instances idle.

- CoreXL will improve performance with almost linear scalability in the following scenarios:

- Much of the traffic can not be accelerated by SecureXL

- Many IPS features enabled, which disable SecureXL functionality

- Large rulebase

- NAT rules

- CoreXL will not improve performance in the following scenarios:

- SecureXL accelerates much of the traffic

- Traffic mostly consists of VPN (VPN traffic inspection occurs only in CoreXL FW instance #0)

- Traffic mostly consists of VoIP (VoIP control connections are processed only in CoreXL FW instance #0)

In some cases it may be advisable to change the distribution of kernel instances, the SND, and other processes among the processing cores. This configuration change is known as Performance Tuning. It is done by changing the affinities of different NICs (interfaces) and/or processes. However, to ensure CoreXL's efficiency, all interface traffic must be directed to cores not running kernel instances. Therefore, if you change the affinities of interfaces or other processes, you will need to set the number of kernel instances accordingly and ensure that the instances run on other processing cores.

Automatic Mode - (default) Affinity is determined by analysis of the load on each NIC. If a NIC is not activated, Affinity is not set. NIC load is analyzed every 60 seconds.

Manual Mode - Configure Affinity settings for each interface: the processor numbers (separated by spaces) that handle this interface, or all. In Manual Mode, periodic NIC analysis is disabled.

The default affinity setting for all interfaces is Automatic. Automatic affinity means that if Performance Pack is running, the affinity for each interface is automatically reset every 60 seconds and balanced between the available cores. If Performance Pack is not running, the default affinities of all interfaces are with one available core. In both cases, any processing core running a kernel instance, or defined as the affinity for another process, is considered unavailable and will not be set as the affinity for any interface. Automatic affinity may make poor decisions.

Figure. Possible affinity setting.

Figure. MultiCore Processing and packet flow paths.

SecureXL and CoreXL connection info exchange:

- Connection offload - Firewall kernel passes the relevant information about the connection from Firewall Connections Table to SecureXL Connections Table.

Note: In ClusterXL High Availability, the connections are not offloaded to SecureXL on Standby member.

- Connection notification - SecureXL passes the relevant information about the accelerated connection from SecureXL Connections Table to Firewall Connections Table.

- Partial connection - Connection that exists in the Firewall Connections Table, but not in the SecureXL Connections Table (versions R70 and above).

- In Cluster HA - partial connections are offloaded when member becomes Active

- In Cluster LS - partial connections are offloaded upon post-sync (only for NAT / VPN connections)

If a packet matched a partial connection in the outbound, then it should be dropped.

- Delayed connection - Connection created from SecureXL Connection Templates without notifying the Firewall for a predefined period of time. The notified connections are deleted by the Firewall.

Sunday, 12 April 2020

Big IP F5 Load Balancing Basic Concepts

Load Balancing

Load balancing technology is the basis on which today’s Application Delivery Controllers operate. But the pervasiveness of load balancing technology does not mean it is universally understood, nor is it typically considered from anything other than a basic, network-centric viewpoint. To maximize its benefits, organizations should understand both the basics and nuances of load balancing.

By the end of this blog session you will understand the following topics.

- Introduction

- Basic Load Balancing Terminology

- Node, Host, Member, and Server

- Pool, Cluster, and Farm

- Virtual Server

- Putting It All Together

- Packet Flow Diagram

- Load Balancing Basics

- Traffic Distribution Methods

- NAT Functionality

Basic Load Balancing Terminology

Node, Host, Member, and Server

Node, Host -> Physical server, identified by its IP address. Ex: 172.16.5.1

Member -> A service, identified by the combination of an IP address and a port. Ex: 172.16.5.1:80

Server -> Virtual Server (on the F5 load balancer)

Pool, Cluster, and Farm

Load balancing allows organizations to distribute inbound traffic across multiple back-end destinations. It is therefore a necessity to have the concept of a collection of back-end destinations. Clusters, as we will refer to them herein, although also known as pools or farms, are collections of similar services available on any number of hosts. For instance, all services that offer the company web page would be collected into a cluster called “company web page” and all services that offer e-commerce services would be collected into a cluster called “e-commerce.”

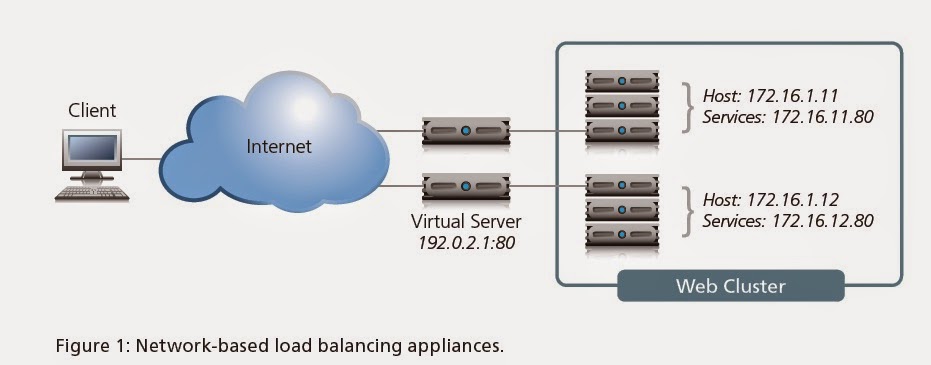

VIRTUAL SERVER:

A virtual server uses a single IP address to represent the server and its listening service. The virtual server listens for connections initiated by the client; once it receives the first packet from the client, it translates the destination address from the virtual address to the node address. The choice of node is based on the load balancing method or on persistence.

Putting It All Together

Packet Flow Diagram

Load Balancing Basics

With this common vocabulary established, let’s examine the basic load balancing transaction. As depicted, the load balancer will typically sit in-line between the client and the hosts that provide the services the client wants to use. As with most things in load balancing, this is not a rule, but more of a best practice in a typical deployment. Let’s also assume that the load balancer is already configured with a virtual server that points to a cluster consisting of two service points. In this deployment scenario, it is common for the hosts to have a return route that points back to the load balancer so that return traffic will be processed through it on its way back to the client.

The basic load balancing transaction is as follows:

1. The client attempts to connect with the service on the load balancer.

2. The load balancer accepts the connection, and after deciding which host should receive the connection, changes the destination IP (and possibly port) to match the service of the selected host (note that the source IP of the client is not touched).

3. The host accepts the connection and responds back to the original source, the client, via its default route, the load balancer.

4. The load balancer intercepts the return packet from the host and now changes the source IP (and possibly port) to match the virtual server IP and port, and forwards the packet back to the client.

5. The client receives the return packet, believing that it came from the virtual server, and continues the process.
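The five steps above can be traced with a small Python model. It is a sketch for illustration only and has nothing to do with how BIG-IP is implemented: the class `LoadBalancer`, its method names, and the client and virtual server addresses are all assumptions, and the host is fixed in advance rather than chosen by a load balancing method.

```python
from typing import NamedTuple, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class Packet(NamedTuple):
    src: Endpoint
    dst: Endpoint


class LoadBalancer:
    def __init__(self, virtual_server: Endpoint, member: Endpoint) -> None:
        self.virtual_server = virtual_server
        self.member = member  # host assumed to be already selected

    def client_to_host(self, pkt: Packet) -> Packet:
        # Step 2: rewrite only the destination; the client source is untouched.
        if pkt.dst != self.virtual_server:
            raise ValueError("packet is not addressed to the virtual server")
        return pkt._replace(dst=self.member)

    def host_to_client(self, pkt: Packet) -> Packet:
        # Step 4: rewrite the source back to the virtual server address.
        if pkt.src != self.member:
            raise ValueError("packet did not come from the selected member")
        return pkt._replace(src=self.virtual_server)


client = ("203.0.113.10", 51000)   # example client address (assumption)
vip = ("192.0.2.80", 80)           # example virtual server (assumption)
member = ("172.16.5.1", 80)        # member from the terminology section

lb = LoadBalancer(vip, member)
request = lb.client_to_host(Packet(src=client, dst=vip))      # steps 1-2
reply = lb.host_to_client(Packet(src=member, dst=client))     # steps 3-4
print(request)
print(reply)
```

The request reaches the host with the client's own source address, and the reply reaches the client looking as if the virtual server had sent it (step 5).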

Traffic Distribution Methods:

STATIC METHOD:

- Round Robin: Client requests are distributed evenly across the available servers, in a 1:1 ratio.

- Ratio: The ratio method distributes the load across the available members in a given proportion. For example, if the proportion is 3:2:1:1, the first server receives 3 connections, the second server receives 2, and the other two servers receive 1 each.
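Both static methods can be sketched in a few lines of Python. This is a simplified illustration, not the BIG-IP algorithm: the function names and server names are hypothetical, and the ratio schedule simply repeats each member in a block, whereas a real load balancer may interleave the picks.

```python
from itertools import cycle, islice
from typing import Dict, Iterator, List


def round_robin(members: List[str]) -> Iterator[str]:
    """Yield members in order, forever, so each gets an equal 1:1 share."""
    return cycle(members)


def ratio(weights: Dict[str, int]) -> Iterator[str]:
    """Yield each member `weight` times per cycle (simple, not interleaved)."""
    schedule = [member for member, weight in weights.items() for _ in range(weight)]
    return cycle(schedule)


rr_picks = list(islice(round_robin(["s1", "s2", "s3"]), 6))
ratio_picks = list(islice(ratio({"s1": 3, "s2": 2, "s3": 1, "s4": 1}), 7))
print(rr_picks)
print(ratio_picks)
```

Over one full cycle of seven picks, the 3:2:1:1 example gives s1 three picks, s2 two, and s3 and s4 one each.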

DYNAMIC METHOD:

Fastest: Load is shared across the members based on response time. The response time is computed every second from the monitor response time and the packet response time.

Least Connections: The least connections mode sends the load to the member that currently has the fewest connections to process.

Observed: The observed method works from system performance, which is a combination of the system response time and the connection count on the system.

Predictive: The predictive method is similar to the observed method. Where the observed method uses the system performance at the current time, the predictive method considers both the current load and the load one second earlier.
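As a rough illustration of the two simplest dynamic methods, the sketch below picks a member from a snapshot of measurements. The function names, the extra member addresses, and the numbers are made up for the example; how BIG-IP actually gathers and weighs these measurements is not shown here.

```python
from typing import Dict


def least_connections(active: Dict[str, int]) -> str:
    """Pick the member with the fewest active connections."""
    return min(active, key=lambda member: active[member])


def fastest(response_ms: Dict[str, float]) -> str:
    """Pick the member with the lowest measured response time."""
    return min(response_ms, key=lambda member: response_ms[member])


# Hypothetical snapshot of three pool members.
active = {"172.16.5.1:80": 12, "172.16.5.2:80": 7, "172.16.5.3:80": 9}
response_ms = {"172.16.5.1:80": 40.0, "172.16.5.2:80": 55.5, "172.16.5.3:80": 18.2}

print(least_connections(active))
print(fastest(response_ms))
```

The two methods can disagree: here the member with the fewest connections is not the one that responds fastest, which is the kind of trade-off the observed and predictive methods try to balance.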

NAT FUNCTIONALITY:

BIG-IP uses NAT in three ways:

- Virtual Server

- Network Address Translation

- Secure Network Address Translation

VIRTUAL SERVER:

A virtual server uses a single IP address to represent the server and its listening service. The virtual server listens for connections initiated by the client; once it receives the first packet from the client, it translates the destination address from the virtual address to the node address. The choice of node is based on the load balancing method or on persistence.

SNAT:

Unlike a virtual server, a SNAT never listens for connections initiated to the SNAT address. Instead, each time BIG-IP sees a connection from an allowed host address, it translates the source address of that connection.

iRules:

An iRule is a script that influences the traffic flowing through the BIG-IP when that traffic matches the script. iRules are commonly used to select the pool that processes a client request. The underlying technology is called the UNIVERSAL INSPECTION ENGINE (UIE).

The Load Balancing Decision

Usually at this point, two questions arise: how does the load balancer decide which host to send the connection to? And what happens if the selected host isn’t working?

Let’s discuss the second question first. What happens if the selected host isn’t working? The simple answer is that it doesn’t respond to the client request and the connection attempt eventually times out and fails. This is obviously not a preferred circumstance, as it doesn’t ensure high availability. That’s why most load balancing technology includes some level of health monitoring that determines whether a host is actually available before attempting to send connections to it.

There are multiple levels of health monitoring, each with increasing granularity and focus. A basic monitor would simply PING the host itself. If the host does not respond to PING, it is a good assumption that any services defined on the host are probably down and should be removed from the cluster of available services. Unfortunately, even if the host responds to PING, it doesn’t necessarily mean the service itself is working. Therefore most devices can do “service PINGs” of some kind, ranging from simple TCP connections all the way to interacting with the application via a scripted or intelligent interaction. These higher-level health monitors not only provide greater confidence in the availability of the actual services (as opposed to the host), but they also allow the load balancer to differentiate between multiple services on a single host. The load balancer understands that while one service might be unavailable, other services on the same host might be working just fine and should still be considered as valid destinations for user traffic.
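The simplest kind of "service PING" mentioned above, a plain TCP connection attempt, could look like the following Python sketch. The function name `service_ping` and its parameters are assumptions for illustration; the `connect` argument defaults to the standard library's `socket.create_connection` and is only injectable so the demo below can run without touching the network.

```python
import socket
from typing import Callable


def service_ping(host: str, port: int, timeout: float = 2.0,
                 connect: Callable = socket.create_connection) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        conn = connect((host, port), timeout)
    except OSError:
        # Refused, unreachable, timed out, or name resolution failed.
        return False
    conn.close()
    return True


# Stand-ins for real sockets, used only for this demo.
class _FakeConn:
    def close(self) -> None:
        pass


def fake_up(address, timeout):
    return _FakeConn()


def fake_down(address, timeout):
    raise ConnectionRefusedError("service not listening")


print(service_ping("172.16.5.1", 80, connect=fake_up))
print(service_ping("172.16.5.1", 443, connect=fake_down))
```

Because the check is per port, the same host can be reported up for one service and down for another, which is the distinction the paragraph above draws between host-level and service-level monitoring.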

This brings us back to the first question: How does the load balancer decide which host to send a connection request to? Each virtual server has a specific dedicated cluster of services (listing the hosts that offer that service) which makes up the list of possibilities. Additionally, the health monitoring modifies that list to make a list of “currently available” hosts that provide the indicated service. It is this modified list from which the load balancer chooses the host that will receive a new connection.

Deciding the exact host depends on the load balancing algorithm associated with that particular cluster. The most common is simple round-robin where the load balancer simply goes down the list starting at the top and allocates each new connection to the next host; when it reaches the bottom of the list, it simply starts again at the top. While this is simple and very predictable, it assumes that all connections will have a similar load and duration on the back-end host, which is not always true. More advanced algorithms use things like current-connection counts, host utilization, and even real-world response times for existing traffic to the host in order to pick the most appropriate host from the available cluster services.

Sufficiently advanced load balancing systems will also be able to synthesize health monitoring information with load balancing algorithms to include an understanding of service dependency. This is the case when a single host has multiple services, all of which are necessary to complete the user’s request. A common example would be in e-commerce situations where a single host will provide both standard HTTP services (port 80) as well as HTTPS (SSL/TLS at port 443). In many of these circumstances, you don’t want a user going to a host that has one service operational, but not the other. In other words, if the HTTPS services should fail on a host, you also want that host’s HTTP service to be taken out of the cluster list of available services. This functionality is increasingly important as HTTP-like services become more differentiated with XML and scripting.
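The combination described above, a "currently available" list filtered by health status and service dependency, followed by round-robin selection, can be sketched as follows. All names (`currently_available`, `RoundRobin`, the host names and the health table) are hypothetical; the health results would normally come from monitors rather than a hard-coded dictionary.

```python
from typing import Dict, List, Set


def currently_available(cluster: List[str],
                        health: Dict[str, Dict[int, bool]],
                        required_ports: Set[int]) -> List[str]:
    """Keep only hosts on which every required service is healthy."""
    return [
        host for host in cluster
        if all(health.get(host, {}).get(port, False) for port in required_ports)
    ]


class RoundRobin:
    """Walk down the available list and start again at the top."""

    def __init__(self) -> None:
        self._next = 0

    def pick(self, hosts: List[str]) -> str:
        if not hosts:
            raise LookupError("no host is currently available")
        host = hosts[self._next % len(hosts)]
        self._next += 1
        return host


cluster = ["host1", "host2", "host3"]
health = {
    "host1": {80: True, 443: True},
    "host2": {80: True, 443: False},  # HTTPS failed, so HTTP is withdrawn too
    "host3": {80: True, 443: True},
}

available = currently_available(cluster, health, {80, 443})
chooser = RoundRobin()
picks = [chooser.pick(available) for _ in range(4)]
print(available)
print(picks)
```

host2 still answers on port 80, but because the e-commerce flow needs both services it is left out of the list entirely, and new connections alternate between the two remaining hosts.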
